HW8. Panoramic Stiching

Topics: ORB features, Homography, RANSAC(RAndom SAmple Consensus)

Problem: Panoramic Stiching

Brief Overview

In this problem we will develop an algorithm for stitching a panorama from overlapping photos, which amounts to estimating a transformation that aligns one image to another. To do this, we will compute ORB features1 in both images and match them to obtain correspondences. We will then estimate a homography from these correspondences, and we’ll use it to stitch the two images together in a common coordinate system.

In order to get an accurate transformation, we will need many accurate feature matches. Unfortunately, feature matching is a noisy process: even if two image patches (and their ORB descriptors) look alike, they may not be an actual match.

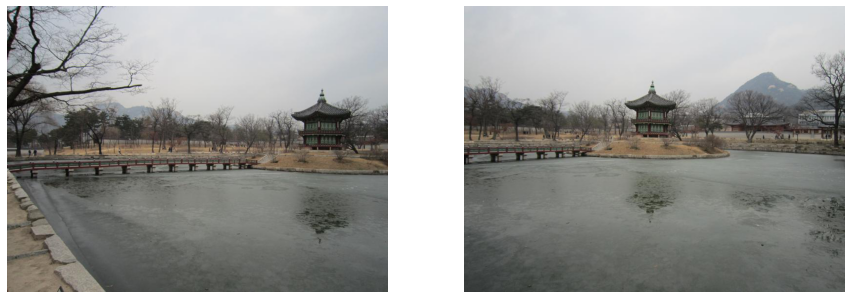

To make our algorithm robust to matching errors, we will use RANSAC, a method for estimating a parametric model from noisy observations. We will detect keypoints and represent descriptors using ORB. We will then match features, using heuristics to remove bad matches. We have provided you with two images (Figure 1) that you’ll use to create a panorama.

The panoramic stitching algorithm consists of four main steps which we ask you to implement in individual functions:

-

Detect keypoints and extract local invariant descriptors (we will be using ORB) from two input images.

-

Match the descriptors between the two images.

-

Apply RANSAC to estimate a homography matrix between the extracted features.

-

Apply a perspective transformation using the homography matrix to merge image into a panorama.

Functions to implement (refer to function comments for more detail):

-

get_orb_features(2 points) -

match_keypoints(2 points) -

find_homography(2 points) -

transform_ransac(2 points) -

panoramic_stitching(2 points)

Getting started

Run the following code to import the modules you’ll need.

%matplotlib inline

import cv2

import random

import numpy as np

import matplotlib.pyplot as plt

from google.colab.patches import cv2_imshow

%%capture

! wget -O img1.jpg "https://drive.google.com/uc?export=download&id=1omMydL6ADxq_vW5gl_1EFhdzT9kaMhUt"

! wget -O img2.jpg "https://drive.google.com/uc?export=download&id=12lxB1ArAlwGn97XgBgt-SFyjE7udMGvf"

Visualize Input Images

img1 = plt.imread('img1.jpg')

img2 = plt.imread('img2.jpg')

def plot_imgs(img1, img2):

fig, ax = plt.subplots(1, 2, figsize=(15, 20))

for a in ax:

a.set_axis_off()

ax[0].imshow(img1)

ax[1].imshow(img2)

plot_imgs(img1, img2)

(a) Feature Extraction

You will start by computing features and image correspondences.

(a)-(i) Compute ORB Features

Implement get orb features(img) to compute orb features for both of the given image.

def get_orb_features(img):

'''

Compute ORB features using cv2 library functions.

Use default parameters when computing the keypoints.

Hint: you will need cv2.ORB_create() and some related functions

Input:

img: cv2 image

Returns:

keypoints: a list of cv2 keypoints

descriptors: a list of ORB descriptors

'''

#############################################################################

# TODO #

#############################################################################

orb = cv2.ORB_create()

keypoints, descriptors = orb.detectAndCompute(img, None)

#############################################################################

# END OF YOUR CODE #

#############################################################################

return keypoints, descriptors

(a)-(ii) Match Keypoints

Implement match keypoints(desc1, desc2) to compute keypoint correspondences between the two source images using the ratio test. Run the plotting code to visualize the detected features and resulting correspondences.

def match_keypoints(desc_1, desc_2, ratio=0.75):

'''

Compute matches between feature descriptors of two images using

Lowe's ratio test. You may use cv2 library functions.

Hint: you may need to use cv2.DescriptorMatcher_create or cv2.BFMatcher

and some related functions

Input:

desc_1, desc_2: list of feature descriptors

Return:

matches: list of feature matches

'''

#############################################################################

# TODO #

#############################################################################

matcher = cv2.DescriptorMatcher_create("BruteForce")

matches_all = matcher.knnMatch(desc_1, desc_2, 2)

matches = []

for match in matches_all:

if (match[0].distance < ratio * match[1].distance):

matches.append(match[0])

#############################################################################

# END OF YOUR CODE #

#############################################################################

return matches

kp_1, desc_1 = get_orb_features(img1)

kp_2, desc_2 = get_orb_features(img2)

kp_img1 = cv2.drawKeypoints(img1, kp_1, None, color=(0,255,0), flags=0)

kp_img2 = cv2.drawKeypoints(img2, kp_2, None, color=(0,255,0), flags=0)

print('keypoints for img1 and img2')

plot_imgs(kp_img1, kp_img2)

keypoints for img1 and img2

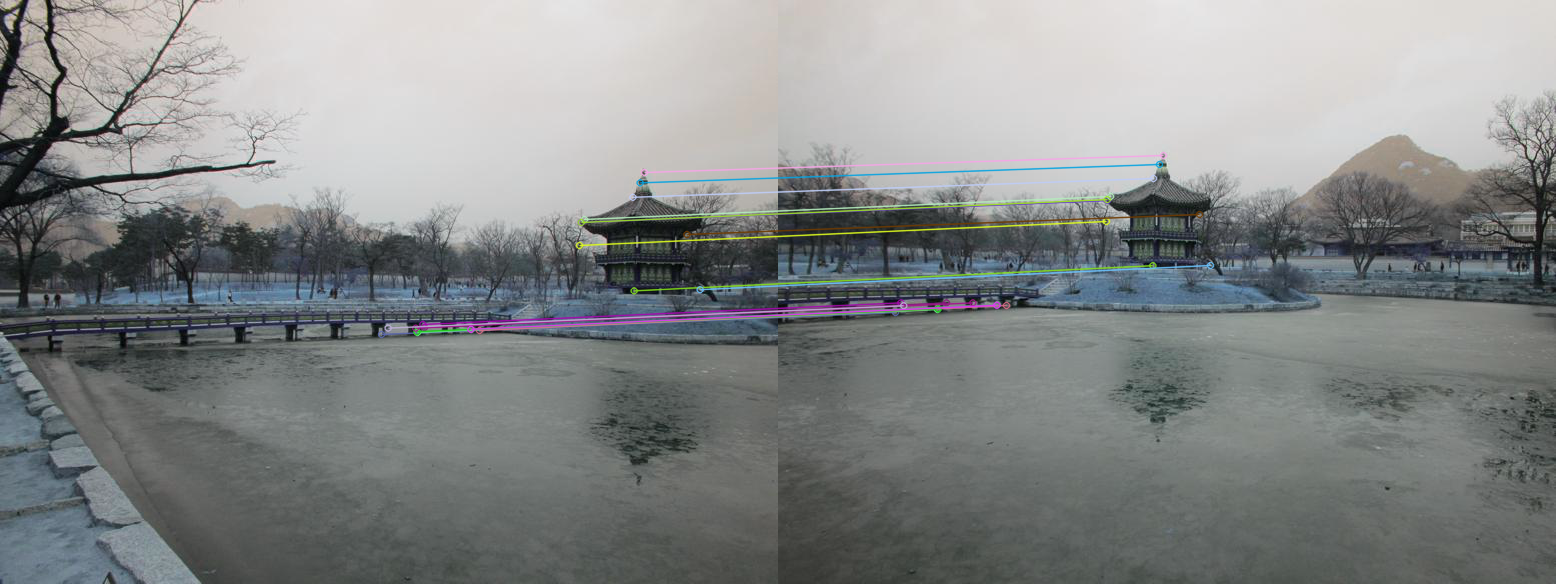

matches = match_keypoints(desc_1, desc_2)

match_plot = cv2.drawMatches(img1, kp_1, img2, kp_2, matches[:20], None, flags=2)

print("orb feature matches")

cv2_imshow(match_plot)

orb feature matches

(b) Find Homography Matrix

Write a function find homography(pts1, pts2) that takes in two N × 2 matrices with the x and y coordinates of matching 2D points in the two images and computes the 3 × 3 homography H that maps pts1 to pts2. You can implement this function using nonlinear least squares (or, alternatively, the direct linear transform).

- Define a cost function, f(h;pts1,pts2), that calculates the projection error (a vector of length 2N) between pts1 and projected pts2 using homography H as pts1 − cart(H ∗ homog(pts2)). Here homog(x) converts x into homogeneous coordinates, cart(x) converts x to cartesian coordinates and h is the flattened version of the homography H to be estimated.

- Provide an initial guess for the homography h: a length 9 vector filled with ‘1’s should be good enough.

from scipy.optimize import least_squares

def homog(x):

return np.concatenate((x, np.ones((x.shape[0], 1))), axis = 1)

def cart(x):

return x[:,:2] / x[:, 2].reshape((x.shape[0], 1))

def find_homography(pts_1, pts_2):

'''

Use either nonlinear least squares or direct linear transform

to find a homography that estimates the transformation mapping from pts_1

to pts_2.

e.g. If x is in pts_1 and y is in pts_2, then y = H * x

Hint if using nonlinear least square:

The objective function to optimize here is:

||pts_1 - cart(H*homog(pts_2))||^2 where homog(x) converts x into

homogeneous coordinates and cart(x) converts x to cartesian coordinates.

You can use scipy.optimize.least_squares for this.

Hint if using direct linear transform:

The solution is given by the right-singular vector with the smallest singular value in the singular vector decomposition.

You can use np.linalg.svd for this.

Input:

pts_1, pts_2: (N, 2) matrix

Return:

H: the resultant homography matrix (3 x 3)

'''

#############################################################################

# TODO #

#############################################################################

def cost_function(h):

H = np.reshape(h, (3, 3))

return (pts_1 - cart(np.transpose(np.matmul(H, np.transpose(homog(pts_2)))))).flatten()

h = np.ones(9)

H = least_squares(cost_function, h).x.reshape((3, 3))

#############################################################################

# END OF YOUR CODE #

#############################################################################

return H

(c) Implement RANSAC

Your homography-fitting function from (b) will only work well if there are no mismatched features. To make it more robust, implement a function transform ransac(pts1, pts2) that fits a homography using RANSAC. You can call find homography(pts1, pts2) inside the inner loop of RANSAC. You will also be responsible for figuring out the set of parameters to use to produce the best results.

from numpy import linalg as LA

def transform_ransac(pts_1, pts_2, verbose=False):

'''

Implements RANSAC to estimate homography matrix.

Hint: Follow the RANSAC steps outlined in the lecture slides.

Hint: Try num iterations = 1000 (not mandatory)

Hint: Threshold ε =2 (ε here refers to the L2 distance between two points)

Input:

pts_1, pts_2: (N, 2) matrices

Return:

best_model: homography matrix with most inliers

'''

#############################################################################

# TODO #

#############################################################################

NUM_ITERATION = 1000

THRESHOLD = 2

best_num_inliers = 0

best_inliers_mask = None

for i in range(NUM_ITERATION):

sample_idx = np.random.choice(pts_1.shape[0], 4, replace = False)

H = find_homography(pts_1[sample_idx], pts_2[sample_idx])

dist = LA.norm(pts_1 - cart(np.transpose(np.matmul(H, np.transpose(homog(pts_2))))), axis = 1)

inliers_mask = (dist < THRESHOLD)

num_inliers = np.sum(inliers_mask)

if num_inliers > best_num_inliers:

best_num_inliers = num_inliers

best_inliers_mask = inliers_mask

best_model = find_homography(pts_1[best_inliers_mask], pts_2[best_inliers_mask])

#############################################################################

# END OF YOUR CODE #

#############################################################################

return best_model

(d) Panoramic Stitching

Write a function panoramic stitching(img1, img2) that produces a panorama from a pair of overlapping images using your functions from the previous parts. Run the algorithm on the two images provided. You result should be similar to that of Figure 1.

def panoramic_stitching(img1, img2):

'''

Given a pair of overlapping images, generate a panoramic image.

Hint: use the functions that you've written in the previous parts.

Input:

img1, img2: cv2 images

Return:

final_img: cv2 image of panorama

'''

#############################################################################

# TODO #

# 1. detect keypoints and extract orb feature descriptors #

# 2. match features between two images #

# 3. compute homography matrix H transforming points from pts_2 to pts_1. #

# Note the order here (not pts_1 to pts_2)! #

#############################################################################

kp_1, desc_1 = get_orb_features(img1)

kp_2, desc_2 = get_orb_features(img2)

matches = match_keypoints(desc_1, desc_2)

pts_1 = []

pts_2 = []

for match in matches:

pts_1.append(kp_1[match.queryIdx].pt)

pts_2.append(kp_2[match.trainIdx].pt)

pts_1 = np.array(pts_1)

pts_2 = np.array(pts_2)

H = transform_ransac(pts_1, pts_2)

#############################################################################

# END OF YOUR CODE #

#############################################################################

# apply perspective wrap to stitch images together

final_img = cv2.warpPerspective(img2, H, (img2.shape[1] + img1.shape[1], img2.shape[0] * 2))

final_img[0:img1.shape[0], 0:img1.shape[1]] = img1

return final_img

result = panoramic_stitching(img1, img2)

cv2_imshow(result)